Architecting Memory Pools For HPC And AI Applications Using CXL

Over the past decade, much of the focus in machine learning has been on CPUs and accelerators, primarily GPUs but also custom ASICs, with advances in chip architecture aimed at boosting parallel math performance. The need for speed is increasing as fast as the datasets swell and the model parameters explode, and a growing list of vendors – from longtime industry players like Intel, AMD, IBM and Google to startups like Ampere Computing, Graphcore and SambaNova Systems – are offering silicon aimed at meeting that demand.

Enterprises are looking to AI and machine learning to help them pore through the massive amounts of data they are generating to find patterns and derive useful information that they can use for everything from making better and more timely business decisions to protecting their operations from cyberattacks. It’s been a boon for commercial organizations and the machine learning space overall, which could grow from $15.5 billion last year to more than $152 billion by 2028.

However, while all those advancements in CPUs, GPUs, and custom ASICs have been good for the commercial side of the equation, what is needed for systems that will be doing HPC as well as AI is more memory capacity and more memory bandwidth.

Scientists at the Pacific Northwest National Laboratory (PNNL) in Washington state and engineers at memory chip maker Micron Technology are collaborating to develop an advanced memory architecture for these machine learning-based scientific computing workloads. The two organizations have been working for about two years on the project, which is being sponsored by the Advanced Scientific Computing Research (ASCR) program within the US Department of Energy, which funds PNNL.

“Most of those advances in silicon have been to deal with the artificial neural networks and supporting those,” James Ang, chief scientist for computing at PNNL and the lab’s project leader, tells The Next Platform. “They have to map well to GPUs. There have been advances with high bandwidth memory to help keep the chip fed with data. But the interest of ASCR is not just commercial deep learning and machine learning methods, it’s how we might integrate machine learning methods with scientific computing. This means our traditional partial differential equations and the solution of everyday equations for scientific simulations.”

Scientists often have to contend with memory access patterns that are not an ideal fit for the patterns that traditional compute systems are built around, Ang says.

“The other thing we see is the high-bandwidth memory, while it does have much faster performance, is limited in capacity and for many of our scientific simulations, we need a lot more memory,” he says. “Specifically, what’s needed on the memory side when we’re talking about scientific machine learning? We need to have heterogeneous compute nodes, compute architectures, some mix of using GPUs or maybe some other type of accelerator.”

The challenge there is that each processor, whether a CPU or GPU, has its own separate memory pool. What PNNL and Micron want to do is create a heterogeneous architecture that includes a unified memory pool, which Ang calls a “large shared memory core,” that can be accessed by any processor – any CPU and any accelerator – via a switch, which will enable scaling to much larger systems.

“The idea is that you are still going to have close-in memory, you are going to have caches for CPUs and GPUs, but it’s this third layer that’s meant to serve the needs of both the CPU and the GPUs or other accelerators,” he says.

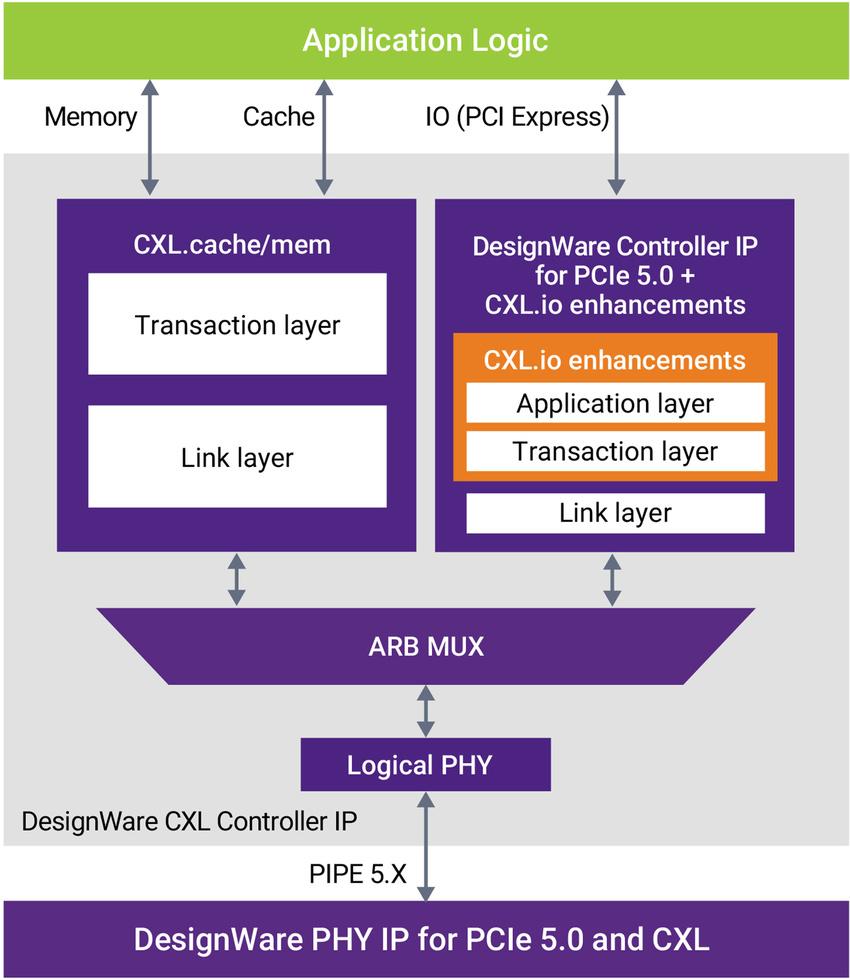

A key to this will be Compute Express Link (CXL), an open industry standard that maintains coherency between CPU memory and memory on attached devices, enabling the sharing of resources. For this project, it puts CPUs and accelerators on equal footing when it comes to addressing the shared memory core, Ang says, adding that if there are needs that CXL can’t address, PNNL and Micron will flag those for improvements in the next version.

Tony Brewer, chief architect for near data computing at Micron, says that CXL “opens the door to re-invent the memory hierarchy.” He calls memory-centric computing “the next paradigm for high-performance data analytics and simulation.”

“CXL 3.0 will enable large, scalable capacity memory attached via CXL fabric switch to a number of CPUs and/or GPUs,” Brewer says. “This style of computing is named ‘memory-centric computing,’ where large data sets are co-located with computing and shared across CPUs and GPUs to reduce access to high-latency storage. The data is accessible using standard memory semantics. However, the combination of CXL technology and the switching fabric will have latency implications. This creates motivation to move some ‘basic’ computational tasks close to the fabric-attached memory and storage to compensate for those latency implications, which could also provide the added benefit of increasing application performance and reducing energy consumption.”
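The latency argument Brewer makes can be illustrated with a rough back-of-envelope model. The sketch below is an illustration only and is not drawn from the PNNL or Micron design: it assumes a pointer-chasing task (each load depends on the previous one, as in a graph traversal), and the latency figures `FABRIC_NS` and `LOCAL_NS` are hypothetical placeholders rather than measured CXL numbers.

```python
# Back-of-envelope latency model for a chain of dependent memory accesses.
# All latency values are hypothetical placeholders, not measurements.

FABRIC_NS = 500.0  # assumed round-trip latency from host to fabric-attached memory
LOCAL_NS = 100.0   # assumed access latency seen by a compute unit next to that memory


def host_side_chase_ns(hops: int, fabric_latency_ns: float) -> float:
    """Host follows the chain itself: every dependent load crosses the fabric."""
    return hops * fabric_latency_ns


def near_memory_chase_ns(hops: int, fabric_latency_ns: float,
                         local_latency_ns: float) -> float:
    """Host sends one request; a near-memory unit follows the chain locally
    and returns only the final result (one fabric round trip in total)."""
    return fabric_latency_ns + hops * local_latency_ns


if __name__ == "__main__":
    hops = 1000
    host = host_side_chase_ns(hops, FABRIC_NS)
    near = near_memory_chase_ns(hops, FABRIC_NS, LOCAL_NS)
    print(f"host-side:   {host:.0f} ns")
    print(f"near-memory: {near:.0f} ns")
    print(f"ratio:       {host / near:.2f}x")
```

Under these assumed numbers the fabric latency is paid once instead of once per hop, which is the kind of compensation the quote describes. Real results would depend on the actual fabric, the workload, and how much of the task can run near the memory.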

PNNL and Micron are working to build a test platform for implementing compute near-memory using FPGAs. The project includes such milestones as creating specialized accelerators targeting Micron’s near-memory design for such work as scientific computing, machine learning and data analytics. Custom accelerators will be designed for irregular kernels – such as sparse and graph analytics – for large shared memory pools and parallel accelerators with memory-centric analysis. The parallel accelerators include high-throughput and dataflow-based architectures.
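To make “irregular kernels” concrete, here is a minimal sketch of one textbook example, a sparse matrix-vector multiply over a matrix stored in compressed sparse row (CSR) form. It is not code from the project; the function name `csr_spmv` and the small test matrix are chosen purely for illustration. The point to notice is the indirect load `x[col_idx[k]]`: the address touched depends on the data itself, which is what makes such kernels a poor fit for caches and a candidate for near-memory acceleration.

```python
# Illustrative sparse matrix-vector multiply (y = A @ x) with A in CSR form.
# Not project code: names and data are hypothetical examples.

def csr_spmv(row_ptr, col_idx, values, x):
    """Multiply a CSR matrix by a dense vector x and return the result."""
    num_rows = len(row_ptr) - 1
    y = [0.0] * num_rows
    for row in range(num_rows):
        acc = 0.0
        # Nonzeros of this row live in values[row_ptr[row] : row_ptr[row + 1]].
        for k in range(row_ptr[row], row_ptr[row + 1]):
            # Indirect, data-dependent access into x: the irregular part.
            acc += values[k] * x[col_idx[k]]
        y[row] = acc
    return y


# Example 3x3 matrix:
# [[2, 0, 1],
#  [0, 3, 0],
#  [4, 0, 5]]
row_ptr = [0, 2, 3, 5]
col_idx = [0, 2, 1, 0, 2]
values = [2.0, 1.0, 3.0, 4.0, 5.0]
x = [1.0, 2.0, 3.0]

if __name__ == "__main__":
    print(csr_spmv(row_ptr, col_idx, values, x))  # [5.0, 6.0, 19.0]
```

Graph analytics kernels follow the same pattern, since an adjacency list is effectively a sparse matrix and a traversal chases neighbor indices in the same indirect way.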

The organizations have developed a “high-level synthesis design capability,” Ang says.

“We’ve been building open-source high-level synthesis tools,” he says. “We have a tool chain that can provide Verilog that will be implemented in FPGAs and we’ve been specifically targeting the design of accelerators that support irregular applications and graph analytics applications. Those would then complement Micron’s designs for other compute capabilities that are near-memory. That would be specialized or customized accelerator designs that will operate on the data near-memory.”

This is all part of expanding the idea of heterogeneous computing, because that memory is still addressable by CPUs and GPUs, according to Ang.

“For this to work properly, we need to have a compiler framework,” he says. “We need to have runtime support to map our scientific and machine learning graph analytics applications onto this array of processors and accelerators. We have capabilities in the software stack to design – at least on a prototype level – the programming models to map our applications onto what is now a heterogeneous computing architecture with our shared-memory pools and CPUs, GPUs and other accelerators that we are defining.”

They’ve been working with compiler frameworks and leveraging MLIR (Multi-Level Intermediate Representation), an open source intermediate representation format and library of compiler utilities. It’s part of LLVM, a set of compiler and toolchain technologies for building front ends for programming languages and back ends for instruction set architectures.

Other work being done includes “exploring how to share data, define which functions could be co-resident with memory and storage, what is the applications programming interface, etc.,” Brewer says.

They also are standing up proof-of-concept systems so that applications can be ported to the new approach and the teams can understand what tradeoffs are needed to ensure the systems can reach their full potential, he says. Micron is developing the computational capability to be embedded in the CXL-based memory and storage subsystems.

“What we’re trying to specifically tackle in this project with Micron is scientific machine learning,” Ang says. “It’s not increasing the performance of the scientific simulation in a vacuum, but actually thinking about how scientific simulation gets tightly integrated and coupled to new advances in machine learning methods, artificial neural networks and so on.”

It would create an environment where algorithms are used to run simulations while the results of those simulations are looped back into the algorithms.

“The idea is that if these – let’s call these two different compute patterns – can be supported in a tightly coupled manner, where the results of machine learning training can be directly used and influence the running of high-performance computing scientific simulation, then we’ve kind of closed the loop there,” he says. “Conversely, if the output or the intermediate results of a simulation can be encoded into layers of the artificial neural network, then we can accelerate the training process. So we’re looking for those kinds of virtuous cycles that will arise when these two models of computing are more tightly coupled and we view the memory as a way to accomplish this coupling.”

There are a number of challenges that need to be solved, Ang says.

“We need to make sure that our software tool chain integrates well with the hardware conceptual design that Micron has developed,” he says. “Micron looks to PNNL to provide input on the system software side – again, our runtime, our compiler, programming model work, and then our application drivers, so that we’re bringing to the table some of our scientific application drivers.”

The hope is that what PNNL and Micron create through this project can find its way into the wider HPC and scientific computing market.

“I don’t know that we’re adding that much more there, but the idea of running machine learning methods, leveraging what exists with that tight coupling to scientific simulations is breaking new ground,” Ang says. “Ultimately, we know that for Micron to view this as a success, what we are trying to do is bring a whole new set of application drivers to the table that might create new demand for advanced memory technology that Micron’s developing.”